Topic 02.2: Building an NLP Pipeline (PART-2)

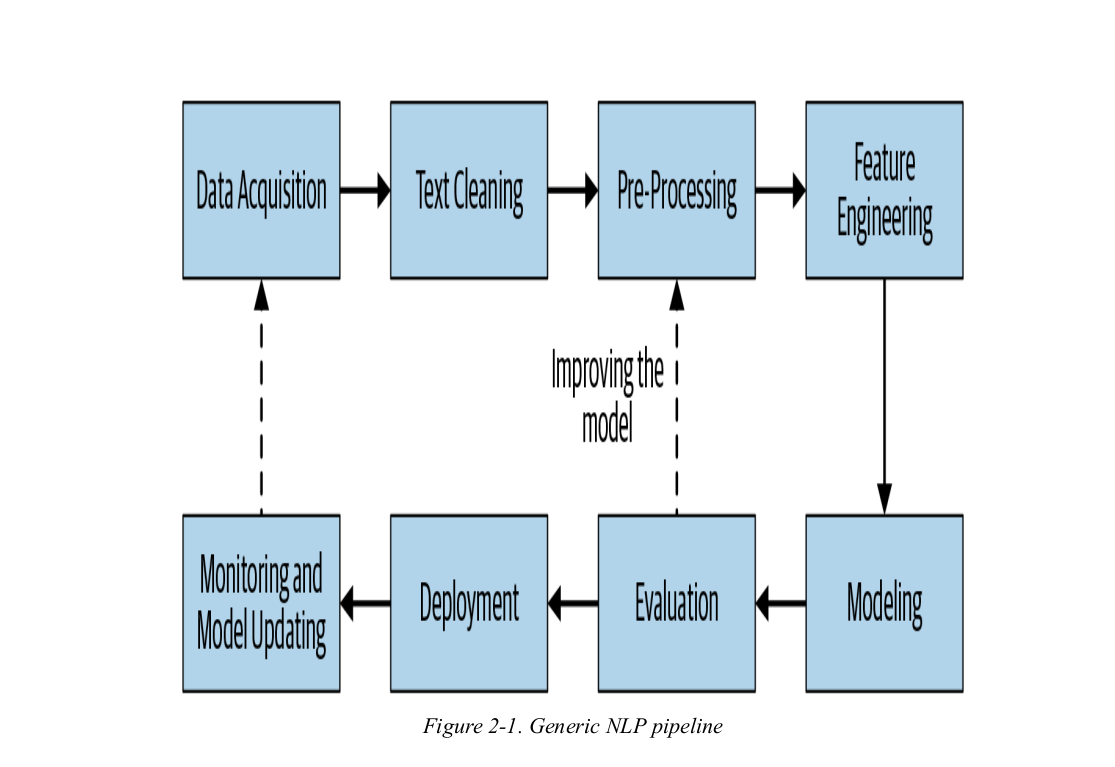

Let's resume our discussion of the NLP pipeline. In the previous blog post we completed the first two steps of the pipeline: data acquisition and text cleaning. In this post we will cover pre-processing and feature engineering.

PRE-PROCESSING:

To pre-process your text simply means to bring your text into a form that is predictable and analysable for your task. A task here is a combination of approach and domain. For example, extracting top keywords with TF-IDF (approach) from tweets (domain) is a task.

Task = approach + domain

One task’s ideal pre-processing can become another task’s worst nightmare. So take note: text pre-processing is not directly transferable from task to task.

Let’s take a very simple example. Say you are trying to discover commonly used words in a news dataset. If your pre-processing removes stopwords just because some other task did, you will probably miss some of the common words, because you have ALREADY eliminated them. So really, pre-processing is not a one-size-fits-all approach.

Here are some common pre-processing steps used in NLP software:

- Preliminaries:

Sentence segmentation and word tokenization.

- Frequent steps:

Stop word removal, stemming and lemmatization, removing digits/punctuation, lowercasing, etc.

- Advanced processing:

POS tagging, parsing, coreference resolution, etc.

Preliminaries:

While not every step is followed in every NLP pipeline we encounter, the first two groups (preliminaries and frequent steps) are seen more or less everywhere. Let’s take a look at what each of these steps means.

An NLP system analyses text by first breaking it into sentences (sentence segmentation) and then breaking each sentence into words (word tokenization).

SENTENCE SEGMENTATION AND WORD TOKENIZATION:

A naive approach is to split the text into sentences wherever a full stop (.) appears. But what happens if the text contains an abbreviation such as “Dr. Joy” or an ellipsis (...)? Splitting on every full stop would break sentences in the wrong places.

NLP libraries such as NLTK help overcome these issues:

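```python
import nltk
from nltk.tokenize import sent_tokenize, word_tokenize

# One-time download of the Punkt tokenizer models
# (recent NLTK releases look for "punkt_tab", older ones for "punkt")
nltk.download("punkt")
nltk.download("punkt_tab")

text = "It's fun to study NLP. Would recommend to all."

print(sent_tokenize(text))  # ["It's fun to study NLP.", 'Would recommend to all.']
print(word_tokenize(text))  # ['It', "'s", 'fun', 'to', 'study', 'NLP', '.', 'Would', 'recommend', 'to', 'all', '.']
```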
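To see why a library is worth using here, the sketch below contrasts a naive split on full stops with a slightly smarter rule-based splitter. It is only an illustration: `naive_split`, `abbreviation_aware_split` and the `ABBREVIATIONS` set are hypothetical names made up for this example, and NLTK's Punkt tokenizer learns abbreviations statistically from text rather than from a hand-written list.

```python
import re

def naive_split(text):
    # Split after every full stop that is followed by whitespace
    return [s for s in re.split(r"(?<=\.)\s+", text) if s]

# Hypothetical, hand-picked abbreviation list (illustration only)
ABBREVIATIONS = {"dr.", "mr.", "mrs.", "prof.", "e.g.", "i.e."}

def abbreviation_aware_split(text):
    sentences, current = [], []
    for token in text.split():
        current.append(token)
        # End the sentence on '.', '!' or '?' unless the token is a known abbreviation
        if token[-1] in ".!?" and token.lower() not in ABBREVIATIONS:
            sentences.append(" ".join(current))
            current = []
    if current:
        sentences.append(" ".join(current))
    return sentences

text = "Dr. Joy teaches NLP. Her students love it."
print(naive_split(text))               # ['Dr.', 'Joy teaches NLP.', 'Her students love it.']
print(abbreviation_aware_split(text))  # ['Dr. Joy teaches NLP.', 'Her students love it.']
```

The naive version wrongly treats “Dr.” as a complete sentence; the abbreviation list fixes this case but would still fail on any abbreviation it does not know, which is why a trained tokenizer is preferable.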

Frequent Steps:

Some frequent pre-processing steps are:

- Lower casing

- Removal of Punctuation

- Removal of Stopwords

- Removal of Frequent words

- Stemming

- Lemmatization

- Removal of emojis

- Removal of emoticons

These steps are frequent, but which ones to apply varies from problem to problem. For example, suppose we want to predict whether a given text belongs to news, music or some other field. For this problem we cannot remove frequent words: a news article might contain the word “news” many times, and that word is exactly what helps categorize the text.
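Several of the frequent steps above can be sketched in plain Python. This is a minimal example under stated assumptions: the tiny `STOPWORDS` set is hypothetical (NLTK and spaCy ship much larger lists), punctuation removal only covers ASCII punctuation, and “frequent words” here means the `top_n` most common tokens across a small corpus. The usage shows the pitfall described above: dropping the most frequent word throws away “news”, a useful signal for a news-vs-music classifier.

```python
import string
from collections import Counter

# Hypothetical, deliberately tiny stopword list
STOPWORDS = {"the", "a", "an", "is", "of", "to", "and", "in"}

def preprocess(text, remove_stopwords=True):
    text = text.lower()                                               # lowercasing
    text = text.translate(str.maketrans("", "", string.punctuation))  # drop ASCII punctuation
    tokens = text.split()
    if remove_stopwords:
        tokens = [t for t in tokens if t not in STOPWORDS]            # stopword removal
    return tokens

def remove_frequent_words(docs, top_n=1):
    # Count tokens across all documents and drop the top_n most common ones
    counts = Counter(t for doc in docs for t in doc)
    frequent = {word for word, _ in counts.most_common(top_n)}
    return [[t for t in doc if t not in frequent] for doc in docs]

docs = [preprocess("The news is out: NLP news, today!"),
        preprocess("Music news of the day.")]
print(docs)                         # [['news', 'out', 'nlp', 'news', 'today'], ['music', 'news', 'day']]
print(remove_frequent_words(docs))  # [['out', 'nlp', 'today'], ['music', 'day']]
```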

Now you might be wondering what stemming and lemmatization are, so before we move forward let’s understand these terms.

Stemming and lemmatization both reduce inflected (or derived) words to a common root form, so that, for example, “studies” and “studying” can be treated as the same word.

Stemming:

Stemming is fast because it chops off word endings by rule, without knowing the context of the word in the sentence.

- It is a rule-based approach.

- It is less accurate.

- When reducing a word to its root form, stemming may produce a string that is not a real word.

- Stemming is preferred when the meaning of the word is not important for the analysis, for example in spam detection.

- For example: Studies => Studi

```python
import nltk
from nltk.stem.porter import PorterStemmer

# Tokenizer models used by word_tokenize ("punkt_tab" in recent NLTK releases)
nltk.download("punkt")
nltk.download("punkt_tab")

porter_stemmer = PorterStemmer()

word_data = ("Da Vinci Code is such an amazing book to read. The book is full of "
             "suspense and Thriller. One of the best work of Dan Brown.")

# First, tokenize the text into words
nltk_tokens = nltk.word_tokenize(word_data)

# Next, reduce each token to its stem
for w in nltk_tokens:
    print("Actual: %s Stem: %s" % (w, porter_stemmer.stem(w)))
```
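To make the “rule-based chopping” idea concrete, here is a toy suffix-stripping stemmer. It is not the Porter algorithm (which applies several ordered steps with conditions on the shape of the remaining stem); the `SUFFIX_RULES` list and `toy_stem` function are hypothetical and only show how blind suffix rules can turn “Studies” into the non-word “studi” and “news” into “new”, changing its meaning.

```python
# Hypothetical suffix rules, tried in order; the first match wins
SUFFIX_RULES = [("ies", "i"), ("ing", ""), ("ed", ""), ("s", "")]

def toy_stem(word, min_stem=3):
    word = word.lower()
    for suffix, replacement in SUFFIX_RULES:
        # Strip the suffix only if at least min_stem characters would remain
        if word.endswith(suffix) and len(word) - len(suffix) >= min_stem:
            return word[: -len(suffix)] + replacement
    return word

for w in ["Studies", "studying", "jumped", "books", "news"]:
    print(w, "->", toy_stem(w))
# Studies -> studi, studying -> study, jumped -> jump, books -> book, news -> new
```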

Lemmatization:

Lemmatization is slower than stemming, but it takes the context of the word (such as its part of speech) into account before reducing it.

- It is a dictionary-based approach.

- It is more accurate than stemming.

- Lemmatization always returns a real dictionary word (the lemma) as the root form.

- Lemmatization is recommended when the meaning of the word is important for the analysis, for example in question-answering applications.

- For example: “Studies” => “Study”

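```python
import nltk
from nltk.stem import WordNetLemmatizer

nltk.download("punkt")      # tokenizer models (older NLTK releases)
nltk.download("punkt_tab")  # tokenizer models (recent NLTK releases)
nltk.download("wordnet")    # dictionary used by the lemmatizer; some versions also need "omw-1.4"

wordnet_lemmatizer = WordNetLemmatizer()

word_data = ("Da Vinci Code is such an amazing book to read. The book is full of "
             "suspense and Thriller. One of the best work of Dan Brown.")

nltk_tokens = nltk.word_tokenize(word_data)

# lemmatize() treats every word as a noun (pos="n") unless a pos argument is given,
# so verb forms may not be reduced as expected
for w in nltk_tokens:
    print("Actual: %s Lemma: %s" % (w, wordnet_lemmatizer.lemmatize(w)))
```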
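The “dictionary-based” part can likewise be shown with a toy lookup table. In NLTK the dictionary is WordNet, and the lookup also applies morphological rules and exception lists; here `LEMMA_DICT` and `toy_lemmatize` are hypothetical and hand-filled for a few words. Keying on the part of speech as well as the word is what lets a lemmatizer map “better” (adjective) to “good”, something no suffix rule could do.

```python
# Hypothetical mini-dictionary keyed by (word, part of speech)
LEMMA_DICT = {
    ("studies", "n"): "study",
    ("studies", "v"): "study",
    ("mice", "n"): "mouse",
    ("was", "v"): "be",
    ("better", "a"): "good",
}

def toy_lemmatize(word, pos="n"):
    # Look up the lowercased word with its POS; fall back to the word itself
    word = word.lower()
    return LEMMA_DICT.get((word, pos), word)

print(toy_lemmatize("Studies"))          # study
print(toy_lemmatize("was", pos="v"))     # be
print(toy_lemmatize("better", pos="a"))  # good
print(toy_lemmatize("better"))           # better (no noun entry, so unchanged)
```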

Some other, less common pre-processing steps are:

Text Normalization:

Text normalization is the process of transforming text into a canonical (standard) form. For example, the words “gooood” and “gud” can be transformed to “good”, their canonical form. Another example is mapping near-identical words such as “stopwords”, “stop-words” and “stop words” to just “stopwords”.
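A minimal normalization sketch, assuming two simple heuristics: collapsing any character repeated three or more times down to two (so “gooood” becomes “good”), and replacing known variants from a lookup table. `CANONICAL` and `normalize` are hypothetical; real systems build such tables from data or spelling dictionaries, and the repeat-collapsing rule is only a heuristic (it would turn “yesss” into “yess”, not “yes”).

```python
import re

# Hypothetical variant -> canonical form table
CANONICAL = {"gud": "good", "stop-words": "stopwords", "stop words": "stopwords"}

def normalize(text):
    text = text.lower()
    # Collapse characters repeated 3+ times down to two: "gooood" -> "good"
    text = re.sub(r"(.)\1{2,}", r"\1\1", text)
    # Replace known variants as whole words, longest variants first
    for variant in sorted(CANONICAL, key=len, reverse=True):
        text = re.sub(r"\b" + re.escape(variant) + r"\b", CANONICAL[variant], text)
    return text

print(normalize("Gooood food, gud service"))          # good food, good service
print(normalize("Remove stop words and stop-words"))  # remove stopwords and stopwords
```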

Language Detection:

What happens if our text is in a language other than English? Then the whole pipeline needs to be adapted to that language, so we need to detect the language before building the pipeline. Python provides various modules for language detection:

- langdetect

- textblob

- langid

```python
from langdetect import DetectorFactory, detect

# langdetect is non-deterministic by default; fixing the seed makes results repeatable
DetectorFactory.seed = 0

# detect() returns a language code (e.g. "en" for English) for each string
print(detect("Geeksforgeeks is a computer science portal for geeks"))
print(detect("Geeksforgeeks - это компьютерный портал для гиков"))
print(detect("Geeksforgeeks es un portal informático para geeks"))
print(detect("Geeksforgeeks是面向极客的计算机科学门户"))
print(detect("Geeksforgeeks geeks के लिए एक कंप्यूटर विज्ञान पोर्टल है"))
print(detect("Geeksforgeeksは、ギーク向けのコンピューターサイエンスポータルです。"))
```
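Libraries like langdetect rely on statistical models trained on large amounts of text. As a much cruder illustration of the idea, the hypothetical `guess_language` function below scores a few languages by how many of their common function words (stopwords) appear in the input. The `STOPWORDS_BY_LANG` lists are tiny, made-up samples, so treat this as a sketch of the concept, not a usable detector.

```python
# Hypothetical, very small stopword samples per language code
STOPWORDS_BY_LANG = {
    "en": {"the", "is", "a", "for", "of", "and", "to"},
    "es": {"el", "la", "es", "un", "una", "para", "de", "y"},
    "fr": {"le", "la", "est", "un", "une", "pour", "de", "et"},
}

def guess_language(text):
    tokens = set(text.lower().split())
    # Score each language by how many of its stopwords occur in the text
    scores = {lang: len(tokens & words) for lang, words in STOPWORDS_BY_LANG.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else "unknown"

print(guess_language("Geeksforgeeks is a computer science portal for geeks"))  # en
print(guess_language("Geeksforgeeks es un portal informático para geeks"))     # es
```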

Advanced Processing:

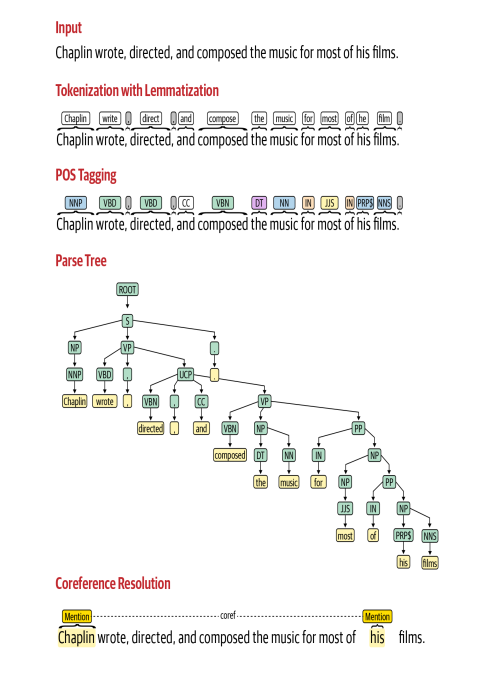

POS Tagging:

Imagine we’re asked to develop a system to identify person and organization names in our company’s collection of one million documents. The common pre-processing steps we discussed earlier may not be relevant in this context. Identifying names requires us to be able to do POS tagging, as identifying proper nouns can be useful in identifying person and organization names. Pre-trained and readily usable POS taggers are implemented in NLP libraries such as NLTK, spaCy and Parsey McParseface Tagger.

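```python
import nltk

# Tokenizer and tagger models (older NLTK releases name them "punkt" and "averaged_perceptron_tagger")
for resource in ("punkt_tab", "averaged_perceptron_tagger_eng"):
    nltk.download(resource)

tokens = nltk.word_tokenize("The quick brown fox jumps over a lazy dog")
print("Part of speech", nltk.pos_tag(tokens))  # list of (word, Penn Treebank tag) pairs
```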
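Continuing the name-finding scenario, one simple way to use POS tags is to group consecutive proper-noun tags (NNP/NNPS in the Penn Treebank tag set that `nltk.pos_tag` uses) into candidate names. The tagged sentence below is hand-written for illustration rather than real tagger output, and `proper_noun_spans` is a hypothetical helper. Note that it also returns “Paris”, a location: POS tags narrow down the candidates, but telling persons from organizations needs further processing.

```python
# Hand-written (word, tag) pairs standing in for tagger output (illustrative only)
tagged = [("Joy", "NNP"), ("Smith", "NNP"), ("works", "VBZ"), ("at", "IN"),
          ("Acme", "NNP"), ("Corp", "NNP"), ("in", "IN"), ("Paris", "NNP"), (".", ".")]

def proper_noun_spans(tagged_tokens):
    spans, current = [], []
    for word, tag in tagged_tokens:
        if tag in ("NNP", "NNPS"):
            current.append(word)              # extend the current run of proper nouns
        elif current:
            spans.append(" ".join(current))   # a non-proper-noun tag closes the run
            current = []
    if current:
        spans.append(" ".join(current))
    return spans

print(proper_noun_spans(tagged))  # ['Joy Smith', 'Acme Corp', 'Paris']
```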

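Parse Tree:

Now, if we need to find the relationship between a person and an organization, we have to analyse the sentence in more depth, and this is where parse trees play a major role. A parse tree is a tree representation of the syntactic categories (phrases such as noun phrases and verb phrases) in a sentence; it helps us understand the sentence’s syntactic structure. NLTK’s RegexpParser builds a shallow version of such a tree by grouping POS-tagged words into phrases (chunks).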

```python
import nltk
from nltk import pos_tag, word_tokenize, RegexpParser

# Tokenizer and tagger models (older NLTK releases name them "punkt" and "averaged_perceptron_tagger")
for resource in ("punkt_tab", "averaged_perceptron_tagger_eng"):
    nltk.download(resource)

# Example text
sample_text = "The quick brown fox jumps over the lazy dog"

# Tag every token with its part of speech
tagged = pos_tag(word_tokenize(sample_text))

# Chunk grammar: each rule groups a tag pattern into a labelled phrase.
# Rules are applied in order, so PP and VP can build on the NP, P and V chunks found earlier.
chunker = RegexpParser("""
NP: {<DT>?<JJ>*<NN>}   # noun phrases
P: {<IN>}              # prepositions
V: {<V.*>}             # verbs
PP: {<P> <NP>}         # prepositional phrases
VP: {<V> <NP|PP>*}     # verb phrases
""")

# Build and print the resulting (shallow) parse tree
output = chunker.parse(tagged)
print("After Extracting\n", output)
```

FEATURE ENGINEERING:

When we use ML methods to perform our modeling step later, we’ll still need a way to feed this pre-processed text into an ML algorithm. Feature engineering refers to the set of methods that will accomplish this task. It’s also referred to as feature extraction. The goal of feature engineering is to capture the characteristics of the text into a numeric vector that can be understood by the ML algorithms.

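In practice, there are two different approaches to feature engineering: one in the classical NLP and traditional ML pipeline, and one in the deep learning (DL) pipeline.

Classical NLP and traditional ML pipeline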

Feature engineering is an integral step in any ML pipeline. Feature engineering steps convert the raw data into a format that can be consumed by a machine. These transformation functions are usually handcrafted in the classical ML pipeline, aligning to the task at hand. For example, imagine a task of sentiment classification on product reviews in e-commerce. One way to convert the reviews into meaningful “numbers” that helps predict the reviews’ sentiments (positive or negative) would be to count the number of positive and negative words in each review. There are statistical measures for understanding if a feature is useful for a task or not.
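The positive/negative word-count idea from the e-commerce example can be sketched as a two-number feature vector per review. The `POSITIVE` and `NEGATIVE` word sets are hypothetical placeholders for a real sentiment lexicon, and tokenization is a plain lowercase split, so punctuation attached to a word (e.g. “phone,”) simply won't match.

```python
# Hypothetical sentiment lexicons (placeholders for a curated lexicon)
POSITIVE = {"good", "great", "love", "excellent", "amazing"}
NEGATIVE = {"bad", "poor", "hate", "broken", "terrible"}

def sentiment_features(review):
    tokens = review.lower().split()
    # Two handcrafted features: [number of positive words, number of negative words]
    return [sum(t in POSITIVE for t in tokens), sum(t in NEGATIVE for t in tokens)]

reviews = ["Great phone, love the camera", "Terrible battery and bad screen"]
features = [sentiment_features(r) for r in reviews]
print(features)  # [[2, 0], [0, 2]]
```

Each review now becomes a fixed-length numeric vector that any classical ML classifier can consume.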

One of the advantages of handcrafted features is that the model remains interpretable—it’s possible to quantify exactly how much each feature is influencing the model prediction.
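To illustrate what “interpretable” means here: with a linear model over handcrafted features, each feature's contribution to the score is just its weight times its value. The weights and bias below are hypothetical numbers, not learned from any data; in practice they would come from training, for example a logistic regression on the count features.

```python
# Hypothetical weights for the two handcrafted features
WEIGHTS = {"num_positive": 1.5, "num_negative": -2.0}
BIAS = 0.1

def explain(features):
    # Per-feature contribution = weight * feature value
    contributions = {name: WEIGHTS[name] * value for name, value in features.items()}
    score = BIAS + sum(contributions.values())
    return score, contributions

score, contributions = explain({"num_positive": 2, "num_negative": 1})
print(contributions)  # {'num_positive': 3.0, 'num_negative': -2.0}
print(score > 0)      # True: the positive words outweigh the negative one
```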

DL pipeline

In the DL pipeline, the raw data (after pre-processing) is fed directly to a model. The model is capable of “learning” features from the data. Because these features are learned for the task at hand, they generally give improved performance. But since all these features are learned via model parameters, the model loses interpretability.

RECAP:

The first step in developing any NLP system is to collect data relevant to the given task. Even if we’re building a rule-based system, we still need some data to design and test our rules. The data we get is seldom (rarely) clean, and this is where text cleaning comes into play. After cleaning, text data often has a lot of variations and needs to be converted into a canonical (standard, predefined) form. This is done in the pre-processing step. This is followed by feature engineering, where we carve out indicators that are most suitable for the task at hand. These indicators/features are converted into a format that is understandable by modeling algorithms.

1. Notes are compiled from: Practical Natural Language Processing: A Comprehensive Guide to Building Real-World NLP Systems; GeeksforGeeks; tutorialspoint (Stemming and Lemmatization); GeeksforGeeks (parse tree); GeeksforGeeks (language detection); morioh; and Medium (Tokenization and Parts of Speech (POS) Tagging in Python’s NLTK library).

2. If you face any problem or have any feedback/suggestions, feel free to comment.