Topic 02.1: Building an NLP Pipeline (Part 1)

If we were asked to build an NLP application, think about how we would approach doing so at an organization. We would normally walk through the requirements and break the problem down into several sub-problems, then try to develop a step-by-step procedure to solve them. Since language processing is involved, we would also list all the forms of text processing needed at each step.

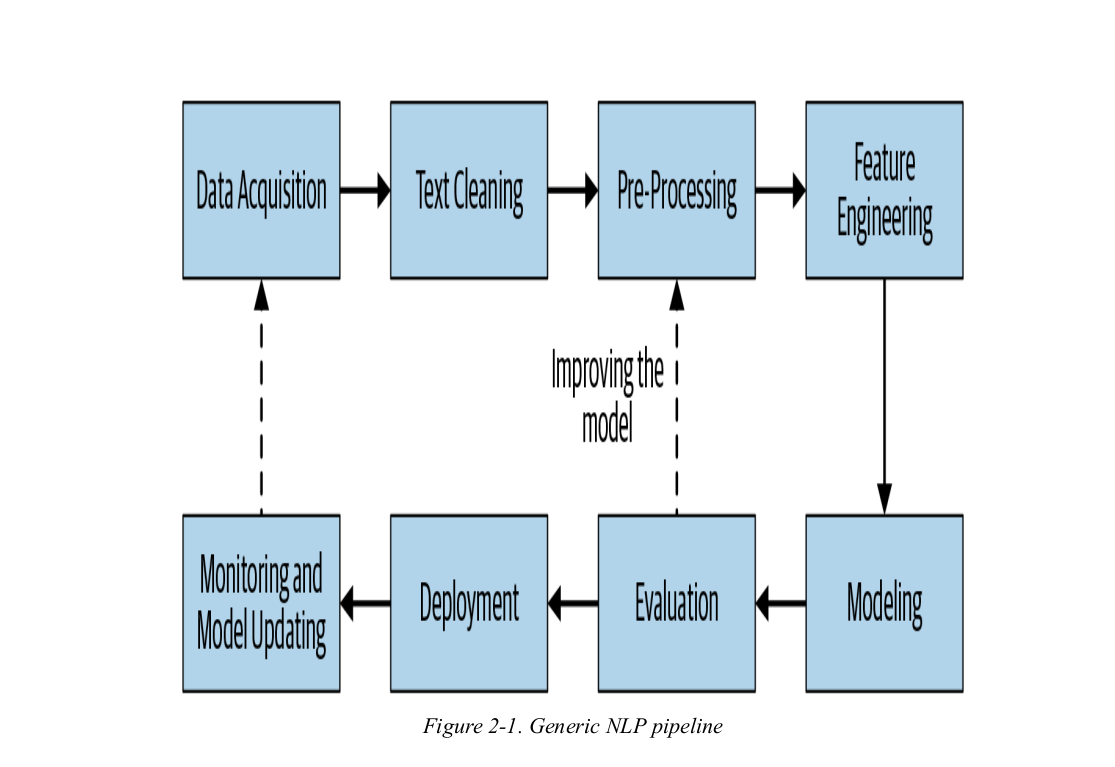

This step-by-step processing of text is known as an NLP pipeline. It is the series of steps involved in building any NLP model.

The key stages in the pipeline are as follows:

- Data acquisition
- Text cleaning
- Pre-processing
- Feature engineering
- Modeling
- Evaluation
- Deployment
- Monitoring and model updating

Before diving into the implementation of NLP applications, the first step is to get a clear picture of the pipeline. Below is a detailed overview of each of its components.

DATA ACQUISITION

Data plays a major role in the NLP pipeline, so how we collect relevant data for an NLP project matters a great deal.

Sometimes the data is readily available to us. At other times, extra effort is needed to collect it.

1) Scrape web pages

Suppose we want to create an application that summarizes the top news stories in just 100 words. For that, we need to scrape data from current-affairs websites and web pages.

2) Data augmentation

NLP has a bunch of techniques through which we can take a small dataset and use some tricks to create more data. These tricks are also called data augmentation, and they try to exploit language properties to create text that is syntactically similar to source text data. They may appear as hacks, but they work very well in practice. Let’s look at some of them:

a) Back translation

Let's say we have a sentence S1 in French. We translate it into another language (in this case English) to get sentence S2. We then translate S2 back into French to get S3. S1 and S3 will usually be very similar in meaning, with slight variations in wording, so we can add S3 to our dataset.

b) Replacing entities

To create more data, we replace entity names with other entities of the same type. Say S1 is "I want to go to New York"; we can replace New York with another entity name, e.g. New Jersey.
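A minimal sketch of entity replacement, assuming the entity has already been identified and that we have a list of interchangeable entities. The `LOCATIONS` list and the `replace_entity` helper are hypothetical names introduced for this example.

```python
import random

# Hypothetical pool of interchangeable location entities.
LOCATIONS = ["New York", "New Jersey", "Boston", "Chicago"]


def replace_entity(sentence, entity, candidates, rng=random):
    """Return a copy of `sentence` with `entity` swapped for a different candidate."""
    if entity not in sentence:
        return sentence
    options = [c for c in candidates if c != entity]
    return sentence.replace(entity, rng.choice(options))


s1 = "I want to go to New York"
s2 = replace_entity(s1, "New York", LOCATIONS, random.Random(0))
print(s2)
```

In a real pipeline the entity spans would typically come from a named entity recognizer rather than being passed in by hand.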

c) Synonym replacement

Randomly choose “k” words in a sentence that are not stop words. Replace these words with their synonyms.
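As a rough sketch of this idea, the snippet below uses a tiny hand-made stop-word set and synonym dictionary. Both are assumptions for illustration; a real system would draw on a larger resource such as a thesaurus.

```python
import random

# Tiny hand-made resources, assumed for illustration only.
STOP_WORDS = {"i", "am", "to", "the", "a", "is", "and"}
SYNONYMS = {
    "happy": ["glad", "cheerful"],
    "buy": ["purchase"],
    "big": ["large", "huge"],
    "car": ["automobile"],
}


def synonym_replacement(sentence, k, rng=random):
    """Replace up to k non-stop-words that have a known synonym."""
    words = sentence.split()
    # Positions of words that are not stop words and have a synonym entry.
    candidates = [
        i for i, w in enumerate(words)
        if w.lower() not in STOP_WORDS and w.lower() in SYNONYMS
    ]
    for i in rng.sample(candidates, min(k, len(candidates))):
        words[i] = rng.choice(SYNONYMS[words[i].lower()])
    return " ".join(words)


source = "I am happy to buy a big car"
augmented = synonym_replacement(source, k=2, rng=random.Random(42))
print(augmented)
```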

d) Bigram flipping

Divide the sentence into bigrams. Take one bigram at random and flip it. For example, in “I am going to the supermarket,” we take the bigram “going to” and replace it with the flipped one, “to going,” which gives “I am to going the supermarket.”
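Bigram flipping can be sketched in a few lines of plain Python. The function name `flip_bigram` is made up for this example, and tokenization is simplified to whitespace splitting.

```python
import random


def flip_bigram(sentence, rng=random):
    """Swap the two words of one randomly chosen bigram."""
    words = sentence.split()
    if len(words) < 2:
        return sentence
    i = rng.randrange(len(words) - 1)  # start index of the chosen bigram
    words[i], words[i + 1] = words[i + 1], words[i]
    return " ".join(words)


original = "I am going to the supermarket"
flipped = flip_bigram(original, random.Random(1))
print(flipped)
```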

TEXT CLEANING

After collecting the data, we need to get it into a form the model can use. Raw text often contains symbols and words that convey no meaning to the model during training, so we remove them before feeding the text to the model. This step is called text cleaning. The main text cleaning steps are as follows:

HTML tag cleaning

When collecting data, we often scrape various web pages, and the raw pages contain HTML markup. Libraries such as Beautiful Soup and Scrapy provide a range of utilities to parse web pages, so that the text we collect does not have any HTML tags in it.

    from bs4 import BeautifulSoup
    import re
    import urllib.request

    url = "https://en.wikipedia.org/wiki/Artificial_intelligence"

    try:
        page = urllib.request.urlopen(url)  # connect to the website
    except Exception as err:
        # Stop here: without a page there is nothing to parse.
        raise SystemExit(f"An error occurred: {err}")

    soup = BeautifulSoup(page, 'html.parser')

    # Select the <li> elements whose class starts with "tocsection-"
    regex = re.compile('^tocsection-')
    content_lis = soup.find_all('li', attrs={'class': regex})

    # Keep the first line of text from each matching entry
    content = []
    for li in content_lis:
        content.append(li.getText().split('\n')[0])

    print(content)
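To show the tag removal step on its own, here is a minimal sketch that uses Python's standard `html.parser` module instead of Beautiful Soup, so it runs without extra dependencies. The `TextExtractor` class, the `strip_html` helper, and the sample HTML string are all made up for this example.

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collects text nodes and skips the contents of <script> and <style>."""

    def __init__(self):
        super().__init__()
        self.parts = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        # Keep only visible, non-empty text.
        if not self._skip_depth and data.strip():
            self.parts.append(data.strip())


def strip_html(html):
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.parts)


sample = (
    "<html><body><h1>NLP</h1><p>Text <b>cleaning</b> matters.</p>"
    "<script>var x = 1;</script></body></html>"
)
clean_text = strip_html(sample)
print(clean_text)
```

With Beautiful Soup installed, calling `get_text()` on the parsed document serves a similar purpose.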

Unicode Normalization:

While cleaning the data we may also encounter various Unicode characters, including symbols, emojis, and other graphic characters. To parse such non-textual symbols and special characters, we use Unicode normalization. This means that the text we see should be converted into some form of binary representation to store in a computer. This process is known as text encoding.

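    import emoji  # third-party package: pip install emoji

    # Turn the ":red_heart:" shortcode into the actual emoji character
    text = emoji.emojize("Python is fun :red_heart:")
    print(text)

    # Encode the string as UTF-8 bytes, the form in which it is stored
    encoded = text.encode("utf-8")
    print(encoded)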
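Encoding is only one part of handling Unicode. The sketch below illustrates normalization proper with the standard `unicodedata` module: two strings that look identical but use different code points are mapped to one canonical form. The example strings are chosen for illustration only.

```python
import unicodedata

composed = "caf\u00e9"      # "é" as a single code point
decomposed = "cafe\u0301"   # "e" followed by a combining acute accent

# The two strings render the same but are different code point sequences.
same_before = composed == decomposed

# NFC composes characters, so both spellings end up identical.
nfc = unicodedata.normalize("NFC", decomposed)
same_after = nfc == composed

# NFKC additionally folds compatibility characters such as the "fi" ligature.
folded = unicodedata.normalize("NFKC", "\ufb01le")

print(same_before, same_after, folded)
```

Which form to use depends on the task: NFC preserves the text as written, while NFKC is more aggressive and can be useful when matching or searching.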

Spelling Correction

The data may contain spelling mistakes caused by fast typing, or shorthand and slang of the kind used on social media such as Twitter. Training on such data may hurt the model's predictions, so it is important to handle these errors before feeding the text to the model. We don't have a robust method to fix this, but we can still make a good attempt at mitigating the issue. For example, Microsoft released a REST API that can be used from Python for spell checking.
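As a simple offline illustration (not the Microsoft API mentioned above), the sketch below corrects words by fuzzy matching against a known vocabulary with the standard `difflib` module. The `VOCABULARY` list, the helper names, and the `cutoff` value are assumptions made for this example.

```python
import difflib

# Hypothetical vocabulary; a real system would use a much larger word list.
VOCABULARY = ["language", "processing", "natural", "model", "pipeline", "text"]


def correct_word(word, vocabulary=VOCABULARY, cutoff=0.8):
    """Return the closest vocabulary word, or the word itself if none is close."""
    matches = difflib.get_close_matches(word.lower(), vocabulary, n=1, cutoff=cutoff)
    return matches[0] if matches else word


def correct_text(text):
    return " ".join(correct_word(w) for w in text.split())


corrected = correct_text("natral langauge procesing pipline")
print(corrected)
```

This approach ignores context, so it cannot tell which of two valid words was intended. It is a mitigation, not a robust fix.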

System-Specific Error Correction

- What if we need to extract text from a PDF? Different PDF documents are encoded differently, and sometimes we may not be able to extract the full text, or the structure of the text may get messed up. There are several libraries, such as PyPDF and PDFMiner, to extract text from PDF documents, but they are far from perfect.

- Another common source of textual data is scanned documents. Text extraction from scanned documents is typically done through optical character recognition (OCR), using libraries such as Tesseract.

Recap

The first step in developing any NLP system is to collect data relevant to the given task. Even if we're building a rule-based system, we still need some data to design and test our rules. The data we get is seldom clean, and this is where text cleaning comes into play.

1. Notes are compiled from Practical Natural Language Processing: A Comprehensive Guide to Building Real-World NLP Systems and morioh.
