Topic 02.3: Building an NLP Pipeline (Part 3)

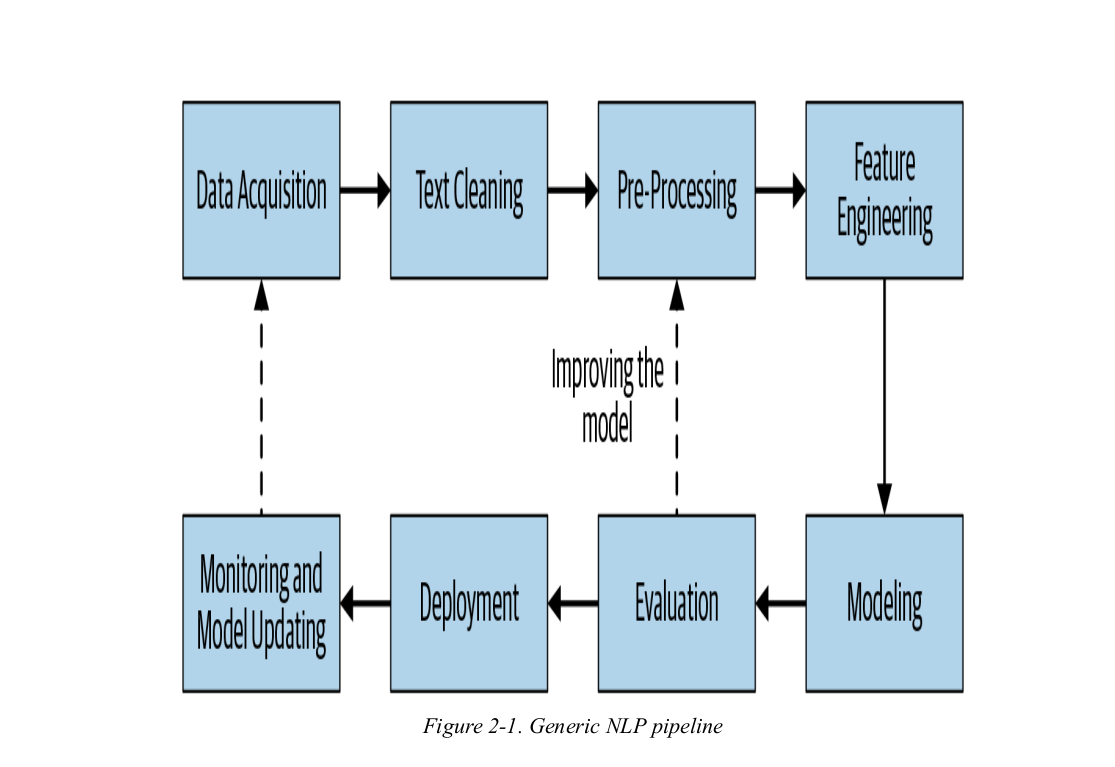

Let's resume our discussion of the NLP pipeline. In the previous two blog posts, we covered the first four steps of the pipeline. In this post, we will cover the remaining steps: modeling, evaluation, deployment, and monitoring and model updating.

Modeling

The next step is to build a useful solution from the prepared data. At the start, when we have limited data, we can use simpler methods and rules. Over time, as we collect more data and better understand the problem, we can add complexity and improve performance. There are several ways to approach modeling:

Start with Simple Heuristics

At the very start of building a model, ML may not play a major role on its own. This is partly due to a lack of data, but human-built heuristics can also provide a strong starting point. For instance, in email spam-classification tasks, we may have a blacklist of domains that are used exclusively to send spam. This information can be used to filter emails from those domains. Similarly, a blacklist of words that signal a high chance of spam could also be used for this classification.
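The blacklist idea can be sketched in a few lines of Python. This is a minimal illustration rather than a production filter: the domain and word lists are hypothetical placeholders, and the rule "two or more distinct blacklisted words means spam" is an assumed threshold you would tune on real data.

```python
# Hypothetical blacklists for illustration only; a real system would load curated lists.
SPAM_DOMAINS = {"cheap-pills.example", "win-a-prize.example"}
SPAM_WORDS = {"lottery", "viagra", "winner", "free"}


def sender_domain(address: str) -> str:
    """Return the lower-cased part of an email address after the last '@'."""
    return address.rsplit("@", 1)[-1].lower()


def is_spam_by_rules(sender: str, body: str, min_word_hits: int = 2) -> bool:
    """Flag an email as spam if its domain is blacklisted or if it contains
    at least `min_word_hits` distinct blacklisted words (assumed threshold)."""
    if sender_domain(sender) in SPAM_DOMAINS:
        return True
    words = {w.strip(".,!?:;").lower() for w in body.split()}
    return len(words & SPAM_WORDS) >= min_word_hits


print(is_spam_by_rules("promo@win-a-prize.example", "Hello there"))                  # True
print(is_spam_by_rules("alice@work.example", "You are a WINNER of a free lottery!"))  # True
print(is_spam_by_rules("alice@work.example", "Free lunch on Friday?"))               # False
```

The last call shows why a word-count threshold helps: a single innocent use of "free" does not trigger the rule on its own.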

Another popular approach to incorporating heuristics into your system is using regular expressions. Stanford NLP’s TokensRegex and spaCy’s rule-based matching are two tools that are useful for defining advanced patterns over tokens (rather than raw characters) to extract other information from text.
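As a simpler stand-in for those token-level tools, here is a sketch using Python's built-in `re` module on raw text. The three patterns (money amounts, URLs, and "urgent" phrases) are hypothetical spam cues chosen for illustration; they are not taken from TokensRegex or spaCy.

```python
import re

# Hypothetical patterns for illustration; tune them on real data.
MONEY = re.compile(r"\$\d+(?:,\d{3})*(?:\.\d{2})?")
URL = re.compile(r"https?://\S+", re.IGNORECASE)
URGENT = re.compile(r"\b(?:act now|limited time|click here)\b", re.IGNORECASE)


def regex_signals(text: str) -> dict:
    """Extract spam-related signals from an email body with regular expressions."""
    return {
        "money": MONEY.findall(text),         # e.g. "$1,000,000"
        "urls": URL.findall(text),            # links up to the next whitespace
        "urgent": bool(URGENT.search(text)),  # any pressure phrase present
    }


print(regex_signals("Click here to claim $1,000,000 at http://prize.example/claim now"))
# {'money': ['$1,000,000'], 'urls': ['http://prize.example/claim'], 'urgent': True}
```

The extracted signals can feed a rule directly or, as discussed below, become features for an ML model.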

Building Your Model

While a set of simple heuristics is a good start, as our system matures, adding more and more heuristics may result in a complex, rule-based system. Such a system is hard to manage, and it can be even harder to diagnose the cause of errors. We need a system that’s easier to maintain as it matures. Further, as we collect more data, our ML model starts beating pure heuristics. At that point, a common practice is to combine heuristics directly or indirectly with the ML model. There are two broad ways of doing that:

a) Create a feature from the heuristic for your ML model

When there are many heuristics, each of which behaves deterministically on its own but whose combined predictive behavior is fuzzy, it’s best to use them as features to train your ML model. For instance, in the email spam-classification example, we can add features such as the number of blacklisted words in a given email or the email bounce rate to the ML model.
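A minimal sketch of turning those two heuristics into features, assuming a hypothetical word blacklist and a sender bounce rate that has already been computed from mail logs. Instead of deciding spam/ham, each heuristic contributes a number that the model can learn to weight.

```python
from dataclasses import dataclass

# Hypothetical blacklist, for illustration only.
SPAM_WORDS = {"lottery", "winner", "free", "prize"}


@dataclass
class Email:
    sender: str
    body: str
    sender_bounce_rate: float  # assumed to be precomputed from past mail logs


def heuristic_features(email: Email) -> list:
    """Turn heuristics into numeric features rather than hard spam/ham decisions,
    so the ML model can learn how much each signal should count."""
    tokens = [t.strip(".,!?:;").lower() for t in email.body.split()]
    blacklist_hits = sum(t in SPAM_WORDS for t in tokens)  # repeated words count again
    return [float(blacklist_hits), email.sender_bounce_rate]


print(heuristic_features(Email("a@b.example", "Free prize! Claim your free gift!", 0.4)))
# [3.0, 0.4]
```

These values would be appended to the model's other features (for example, bag-of-words counts) before training any classifier.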

b) Pre-process your input to the ML model

If a heuristic predicts a particular class with very high accuracy, it’s best to apply it before feeding the data into your ML model. For instance, if the presence of certain words in an email means there’s a 99% chance that it’s spam, it’s best to classify that email as spam directly instead of sending it to the ML model.
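A sketch of this routing logic, assuming a hypothetical list of near-certain trigger phrases and any callable `model` that returns a label. What matters is the control flow: when the high-precision rule fires, the model is never called.

```python
from typing import Callable

# Hypothetical phrases assumed to indicate spam with ~99% precision on historical data.
HIGH_PRECISION_PHRASES = ("claim your prize now", "wire transfer fee")


def classify(body: str, model: Callable[[str], str]) -> str:
    """Apply the near-certain rule first; only ambiguous emails reach the ML model."""
    lowered = body.lower()
    if any(phrase in lowered for phrase in HIGH_PRECISION_PHRASES):
        return "spam"  # decided by the heuristic; the model is never called
    return model(body)  # fall back to the ML model


def stand_in_model(text: str) -> str:
    """Placeholder for a trained classifier."""
    return "ham"


print(classify("Claim your prize NOW!", stand_in_model))  # spam
print(classify("Lunch tomorrow?", stand_in_model))        # ham
```

Besides saving inference cost, this keeps the model's training and evaluation focused on the genuinely ambiguous cases.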

Building The Model

Once we have a baseline, starting from simple heuristics and then building an ML model on top of them, the final step is to build "THE MODEL": one that performs well and is production-ready. Getting there may take many iterations of the model-building process. Some approaches that help are covered here:

1. Ensemble and stacking

A common practice is not to rely on a single model but to use a collection of ML models, often each dealing with a different aspect of the prediction problem. There are two ways of doing this. We can feed one model’s output as input to another model, going sequentially from one model to the next to obtain a final output; this is called model stacking. Alternatively, we can pool the predictions of multiple models to make a final prediction; this is called model ensembling.
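To make the difference concrete, here is a toy sketch in plain Python. The three base "models" are hypothetical hand-written scorers standing in for trained classifiers, and the meta-model weights are made-up numbers; in a real stacking setup they would be learned from the base models' predictions on held-out data.

```python
import math
from statistics import mean


# Hypothetical base models, each returning P(spam) from a different aspect of the email.
def words_model(email: dict) -> float:
    return 0.9 if "lottery" in email["body"].lower() else 0.2


def sender_model(email: dict) -> float:
    return 0.7 if email["bounce_rate"] > 0.3 else 0.1


def length_model(email: dict) -> float:
    return 0.6 if len(email["body"]) < 40 else 0.3


BASE_MODELS = [words_model, sender_model, length_model]


def ensemble_predict(email: dict, threshold: float = 0.5) -> bool:
    """Ensembling: pool the base predictions (here a plain average) into one decision."""
    return mean(m(email) for m in BASE_MODELS) >= threshold


# Made-up meta-model parameters; in practice learned from base-model outputs on held-out data.
META_WEIGHTS = [2.5, 1.5, 0.5]
META_BIAS = -2.0


def stacked_predict(email: dict, threshold: float = 0.5) -> bool:
    """Stacking: base-model outputs become the input features of a second (logistic) model."""
    features = [m(email) for m in BASE_MODELS]
    z = META_BIAS + sum(w * f for w, f in zip(META_WEIGHTS, features))
    return 1 / (1 + math.exp(-z)) >= threshold


spam = {"body": "You won the lottery", "bounce_rate": 0.5}
ham = {"body": "Agenda for Monday's project review meeting attached", "bounce_rate": 0.0}
print(ensemble_predict(spam), stacked_predict(spam))  # True True
print(ensemble_predict(ham), stacked_predict(ham))    # False False
```

The averaging ensemble treats every base model equally, while the stacked meta-model can learn that some base models deserve more trust than others.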

2. Better feature engineering

Better feature engineering can lead to better performance. For instance, if there are many features, we can use feature selection to keep only the most informative ones and arrive at a better model. Feature engineering will be covered in detail in a future topic.
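One common feature-selection approach is a chi-squared filter: score each binary feature (for example, "word appears in the email") by how strongly it is associated with the label, and keep the top k. The sketch below is a plain-Python illustration on made-up toy data; in practice a library implementation such as scikit-learn's `SelectKBest` with the `chi2` score function would normally be used instead.

```python
def chi2_score(feature, labels):
    """Chi-squared statistic of the 2x2 table: feature present/absent vs. spam(1)/ham(0)."""
    pairs = list(zip(feature, labels))
    a = sum(1 for f, y in pairs if f and y)          # present, spam
    b = sum(1 for f, y in pairs if f and not y)      # present, ham
    c = sum(1 for f, y in pairs if not f and y)      # absent, spam
    d = sum(1 for f, y in pairs if not f and not y)  # absent, ham
    n = a + b + c + d
    denom = (a + b) * (c + d) * (a + c) * (b + d)
    return n * (a * d - b * c) ** 2 / denom if denom else 0.0  # 0 when a row/column is empty


def select_k_best(columns, labels, k):
    """Keep the k feature names with the highest chi-squared scores."""
    scores = {name: chi2_score(col, labels) for name, col in columns.items()}
    return sorted(scores, key=scores.get, reverse=True)[:k]


# Made-up toy data: 1 = word present in the email; labels 1 = spam, 0 = ham.
labels = [1, 1, 1, 0, 0, 0]
columns = {
    "lottery": [1, 1, 1, 0, 0, 0],
    "meeting": [0, 0, 0, 1, 1, 1],
    "free":    [1, 1, 0, 1, 0, 0],
    "the":     [1, 1, 1, 1, 1, 1],
}
print(select_k_best(columns, labels, k=2))  # ['lottery', 'meeting']
```

Note that "meeting" scores as high as "lottery": chi-squared rewards strong association in either direction, and a word that appears only in genuine email is just as useful to the classifier. A word like "the", present everywhere, scores zero.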

3. Transfer learning

Transfer learning is a machine learning technique where a model trained on one task is repurposed for a second, related task. For example, for email spam classification, we can take a pre-trained BERT model and fine-tune it on our email dataset.

4. Reapplying heuristics

No ML model is perfect, so our models will still make mistakes. It’s worth revisiting these errors at the end of the modeling pipeline to find common patterns and use heuristics to correct them. We can also apply domain-specific knowledge that is not automatically captured in the data to refine the model’s predictions.
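A sketch of such a post-hoc correction rule, assuming error analysis revealed that the model wrongly flags mail from the user's own organisation and saved contacts as spam. The trusted domain and the contact list are hypothetical examples.

```python
# Hypothetical domain knowledge found during error analysis:
# mail from our own organisation or a saved contact is never spam.
TRUSTED_DOMAINS = {"mycompany.example"}


def corrected_prediction(sender: str, model_label: str, contacts: set) -> str:
    """Override the model's 'spam' label when a trusted-sender rule applies.
    `contacts` is assumed to hold lower-cased email addresses."""
    sender = sender.lower()
    domain = sender.rsplit("@", 1)[-1]
    if model_label == "spam" and (domain in TRUSTED_DOMAINS or sender in contacts):
        return "ham"  # known false-positive pattern, corrected by a rule
    return model_label  # otherwise keep the model's decision


print(corrected_prediction("Boss@MyCompany.example", "spam", set()))  # ham
```

Because the rule only ever flips "spam" to "ham", it can reduce false positives but never adds new ones.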

Evaluation

A key step in the NLP pipeline is measuring how good the model we’ve built is. Success in this phase depends on two factors:

- using the right metric for evaluation

- following the right evaluation process

Evaluations are of two types: intrinsic and extrinsic. Intrinsic evaluation focuses on intermediary objectives, while extrinsic evaluation focuses on performance on the final objective. For example, consider a spam-classification system. The ML metrics will be precision and recall, while the business metric will be “the amount of time users spend on spam email.” Intrinsic evaluation will measure system performance using precision and recall. Extrinsic evaluation will measure the time users waste because a spam email reached their inbox or a genuine email went to their spam folder.
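Intrinsic evaluation for the spam example can be sketched as a precision/recall computation. In spam terms, a false negative is spam that reached the inbox and a false positive is a genuine email sent to the spam folder, which are exactly the errors the extrinsic, time-wasted metric cares about. The labels below are made-up toy data.

```python
def precision_recall(y_true, y_pred, positive="spam"):
    """Precision and recall for the positive (spam) class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)  # spam caught
    fp = sum(t != positive and p == positive for t, p in pairs)  # genuine mail sent to spam folder
    fn = sum(t == positive and p != positive for t, p in pairs)  # spam that reached the inbox
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


y_true = ["spam", "spam", "ham", "ham", "spam"]
y_pred = ["spam", "ham", "ham", "ham", "spam"]
print(precision_recall(y_true, y_pred))  # (1.0, 0.6666666666666666)
```

Here every email flagged as spam really was spam (precision 1.0), but one of three spam emails slipped into the inbox (recall about 0.67).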

Deployment

An NLP model only begins to add value to an organization when its insights routinely become available to the users it was built for. The process of taking a trained model and making its predictions available to users or other systems is known as deployment. Cloud services such as AWS and Azure make deployment considerably easier. More details will be covered in future tutorials.
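As a minimal, hedged illustration of "making predictions available to other systems", the sketch below wraps a stand-in `predict` function in a small HTTP service using Python's standard-library `wsgiref` module. The keyword rule inside `predict` is a hypothetical placeholder for a trained model, and the JSON request/response shape is an assumption. A cloud deployment would typically run an app like this behind a production-grade server rather than `wsgiref`'s simple development server.

```python
import json
from wsgiref.simple_server import make_server


def predict(text: str) -> str:
    """Stand-in for a trained model (hypothetical rule); load a real model in practice."""
    return "spam" if "lottery" in text.lower() else "ham"


def app(environ, start_response):
    """Minimal WSGI app: POST {"text": "..."} and get back {"label": "..."}."""
    if environ.get("REQUEST_METHOD") != "POST":
        start_response("405 Method Not Allowed", [("Content-Type", "text/plain")])
        return [b"POST a JSON body like {\"text\": \"...\"}\n"]
    length = int(environ.get("CONTENT_LENGTH") or 0)
    payload = json.loads(environ["wsgi.input"].read(length) or b"{}")
    body = json.dumps({"label": predict(payload.get("text", ""))}).encode("utf-8")
    start_response("200 OK", [("Content-Type", "application/json"),
                              ("Content-Length", str(len(body)))])
    return [body]


def serve(port: int = 8000) -> None:
    """Serve the app locally with the standard-library development server."""
    with make_server("", port, app) as httpd:
        httpd.serve_forever()
```

Calling `serve()` starts a local server on port 8000; a client would then POST a body such as `{"text": "..."}` and receive a JSON label back. Keeping the model behind a small, stable request/response contract means the model can be retrained and swapped without changing its callers.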

Monitoring and Model Updating

Monitoring

Model performance is monitored constantly after deployment. Monitoring NLP projects and models has to be handled differently from a regular engineering project, as we need to ensure that the outputs our models produce every day make sense. If we’re automatically retraining the model frequently, we also have to make sure that the new models behave reasonably.
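One simple way to check that daily outputs "make sense" is to track a summary statistic of the predictions and alert when it drifts. The sketch below assumes we log the daily fraction of emails predicted as spam and flags any day whose rate is more than three standard deviations from recent history; both the statistic and the threshold are illustrative choices, not a standard.

```python
from statistics import mean, stdev


def spam_rate(labels):
    """Fraction of predictions labelled spam (0.0 for an empty day)."""
    return sum(label == "spam" for label in labels) / len(labels) if labels else 0.0


def check_daily_output(history_rates, today_labels, z_threshold=3.0):
    """Return (alert, today's rate); alert if today's spam rate is an outlier
    relative to recent daily rates. Needs at least two historical days."""
    today = spam_rate(today_labels)
    mu, sigma = mean(history_rates), stdev(history_rates)
    if sigma == 0:
        return today != mu, today  # flat history: any change is suspicious
    return abs(today - mu) / sigma > z_threshold, today


history = [0.20, 0.22, 0.18, 0.21, 0.19]  # made-up daily spam rates
print(check_daily_output(history, ["spam"] * 5))                         # (True, 1.0)
print(check_daily_output(history, ["spam", "ham", "ham", "ham", "ham"]))  # (False, 0.2)
```

A sudden jump like the first case often points to a broken upstream component or a badly retrained model rather than a real surge in spam, which is exactly what this kind of check is meant to catch.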

Model Updating

Once the model is deployed and we start gathering new data, we’ll iterate on the model using this new data to keep its predictions current.
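A common safeguard when updating is to retrain on the newly gathered data and promote the new model only if it does at least as well as the current one on a fixed holdout set. The sketch assumes models are plain callables from text to label; `train_keyword_model` is a deliberately trivial, hypothetical trainer used only so the example runs.

```python
from typing import Callable, List

Model = Callable[[str], str]


def accuracy(model: Model, texts: List[str], labels: List[str]) -> float:
    """Fraction of examples the model labels correctly."""
    return sum(model(t) == y for t, y in zip(texts, labels)) / len(labels)


def train_keyword_model(texts: List[str], labels: List[str]) -> Model:
    """Toy trainer (hypothetical): words seen in spam but never in ham become spam triggers."""
    spam_words, ham_words = set(), set()
    for text, label in zip(texts, labels):
        (spam_words if label == "spam" else ham_words).update(text.lower().split())
    triggers = spam_words - ham_words
    return lambda text: "spam" if triggers & set(text.lower().split()) else "ham"


def maybe_update(current: Model,
                 train_fn: Callable[[List[str], List[str]], Model],
                 new_texts: List[str], new_labels: List[str],
                 holdout_texts: List[str], holdout_labels: List[str]) -> Model:
    """Retrain on new data; keep the candidate only if it is at least as accurate
    as the current model on the same holdout set (ties favour the fresher model)."""
    candidate = train_fn(new_texts, new_labels)
    new_score = accuracy(candidate, holdout_texts, holdout_labels)
    old_score = accuracy(current, holdout_texts, holdout_labels)
    return candidate if new_score >= old_score else current
```

Keeping the holdout set fixed across updates makes successive models directly comparable, so a retrain on noisy new data cannot silently replace a better model.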

A detailed, practical implementation will be covered as we move forward with the topics.